There are many reasons why it is difficult to prove whether programmes work or not. As part of a series drawing out lessons from our evaluation work, The Strategy Unit’s Impact Evaluation Lead Mike Woodall looks at how programme designers can maximise the chances of success.

The NHS invests billions of pounds in new programmes every year. All set out to improve outcomes for patients and to be cost-effective. And none set out to fail. Each therefore needs evaluation to show that it delivered what it set out to.

And yet, all too often, this is simply not possible. This is not always because the programme isn’t delivering improvements. Often it is because the programme has been designed and implemented in a way that makes robust impact evaluation impossible.

Why does this happen? Despite some improvement, it is still true that evaluation is often considered late in the day, rather than built into the programme concept. This means the programme is fully designed - and often being delivered - before the evaluation is even commissioned. While understandable (programme design is often frenetic), this means that the potential benefits of evaluation can be lost.

This is most obvious when designing a method for any impact evaluation. For example: short programmes leave little time to show impact; and programmes rolled out nationally ‘all at once’ make impact evaluation virtually impossible. For an evaluator, few things are more frustrating than having to ‘be pragmatic’ when a few simple tweaks to programme design would have made a high-quality study possible.

What would better look like?

The answer to this is relatively straightforward: if your programme needs to show impact, then you should bring in evaluation expertise as part of programme design.

And, while no substitute for high-quality and tailored support, there are several things that evaluators would advise. For example:

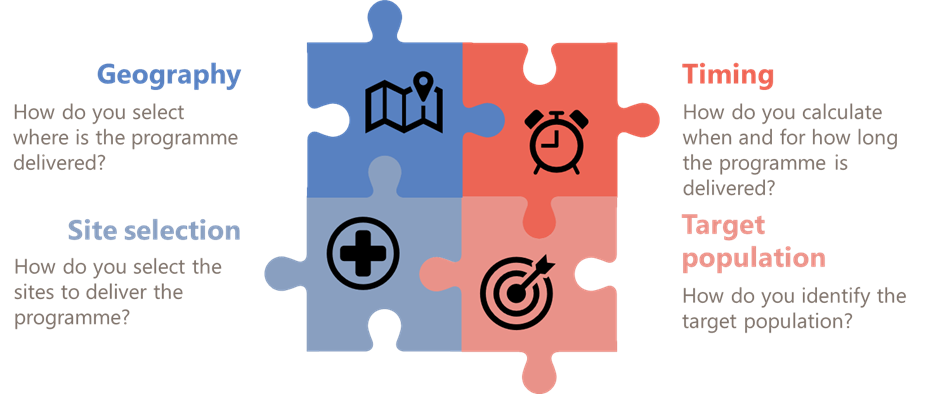

- Choosing the right geography to roll out the programme. Rolling it out through local pilot sites will allow for ‘control sites’ (places where the programme is not implemented) to be included in the analysis. The trade-off here is that it will take longer to implement the intervention. All the reports we write on programmes rolled out nationally have the same caveat: other factors or interventions may have influenced the outcome, and therefore we cannot be sure of the programme’s impact. This caveat is included whether the programme shows a positive impact, a negative impact or no impact.

- Programme length. Longer programmes have more time to demonstrate impact, although funding is often only available for a short period of time (see a previous blog on the challenges of evaluating short-term programmes for more on this). If there is immediate pressure for results, it is worth asking whether the problem is durable or temporary. If the problem is durable, then a rush for results is unlikely to be necessary. When we don’t have enough time to demonstrate impact, we regularly delay our analysis or recommend that the impact evaluation is re-run at a later date.

- Site mix. Identifying a reasonable mix of sites will allow for the results to be more generalisable, but these sites need to be engaged to ensure the intervention is rolled out as widely as possible.

- Target populations. Being able to identify the eligible population in the data will make the evaluation more focused, but some programmes are rightly targeted at the whole population. It is frustrating knowing you are wasting NHS resources trying to evaluate the impossible. We have tried different methods and approaches. Not being able to isolate the target population has meant that some of our evaluations have shown no impact, even where logic suggests they should have had some impact.
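
Two of the points above, control sites and target populations, can be illustrated with some simple arithmetic. The sketch below is a minimal illustration only: the figures are hypothetical, the function names are made up for this example, and it is not the method used in any particular evaluation. A real impact evaluation would use a statistical model and report uncertainty around the estimate.

```python
def difference_in_differences(pilot_before, pilot_after,
                              control_before, control_after):
    """Change in the pilot sites minus the change in the control sites."""
    pilot_change = pilot_after - pilot_before
    control_change = control_after - control_before
    return pilot_change - control_change


def diluted_effect(effect_in_target_group, target_share):
    """Average effect measured when the target group cannot be isolated.

    Assumes (for illustration) that people outside the target group
    are unaffected by the programme.
    """
    return effect_in_target_group * target_share


# Hypothetical emergency admissions per 1,000 people (made-up numbers).
pilot_before, pilot_after = 100.0, 90.0
control_before, control_after = 100.0, 96.0

# Without control sites, the whole fall would be credited to the programme.
naive_change = pilot_after - pilot_before

# With control sites, the fall that happened everywhere is subtracted out.
did_estimate = difference_in_differences(
    pilot_before, pilot_after, control_before, control_after
)

# If only 5% of the measured population was eligible (an assumed share),
# the same effect almost disappears in whole-population data.
whole_population_effect = diluted_effect(did_estimate, target_share=0.05)

print(f"Naive before/after change: {naive_change:.1f}")
print(f"Estimate using control sites: {did_estimate:.1f}")
print(f"Effect seen across the whole population: {whole_population_effect:.1f}")
```

In this made-up example, admissions also fell in the control sites, so part of the apparent improvement in the pilot sites would have happened anyway. And once the remaining effect is averaged over a population in which most people were never eligible, it becomes small enough to be easily lost in normal variation.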

Key considerations for designing your programme to support evaluation

Not every programme needs to work through these considerations. But for programmes that want to prove that what they did caused the desired results, there are few shortcuts. And repeatedly running programmes that can’t be evaluated dooms later programmes to repeating avoidable mistakes. What looks efficient in the short term can be wasteful over time.

It is well recognised that the NHS needs better impact evaluation. Programme designers have a key role to play here: their choices decide whether evaluation is possible or not. To help with this, we have produced this short report, which expands on the suggestions introduced here for programme designers and leaders who want to demonstrate the impact of their work.